DevOps To MLOps Training In Hyderabad

with 100% Placements & Internships

- Comprehensive Curriculum

- Expert Trainers

- Real-Time Projects

- Certification

DevOps to MLOps Course In Hyderabad

Batch Details

| Detail | Information |
| --- | --- |
| Trainer Name | Mr. Rakesh |
| Trainer Experience | 10+ Years |
| Timings | Monday to Friday (Morning and Evening) |
| Next Batch Date | 01-Dec-2025 at 11:00 AM |
| Training Modes | Classroom & Online |
| Call us at | +91 9000360654 |
| Email us at | aimlopsmasters.in@gmail.com |

DevOps to MLOps Institute In Hyderabad

Why choose us?

- Industry-Aligned DevOps to MLOps Curriculum

- Real-Time Case Studies & Use Cases

- Expert-Led Mentorship by Certified Trainers

- Hands-On Live Project Experience

- Advanced ML Model Deployment Skills

- End-to-End CI/CD Pipeline Training

- Cloud & Multi-Platform Integration Focus

- Performance Monitoring & Automation Expertise

- Flexible Learning Modes – Online & Classroom

- Dedicated Placement Assistance & Guidance

- Post-Course Lifetime Support & Updates

- Personalized Career Growth Roadmap

DevOps to MLOps Training In Hyderabad

DevOps to MLOps Curriculum

Module 1: Introduction to DevOps & MLOps

- What is DevOps?

- What is MLOps?

- Difference: DevOps vs MLOps

- Why transition from DevOps to MLOps?

- Key tools & ecosystem overview

Module 2: Software Development Lifecycle (SDLC) & ML Lifecycle

- SDLC phases

- ML project lifecycle (data, training, deployment, monitoring)

- Challenges of ML in production

- Mapping SDLC to ML lifecycle

Module 3: Version Control & Collaboration

- Git basics (branching, merging, pull requests)

- GitHub/GitLab for collaboration

- Managing ML code vs data vs model versions

- DVC (Data Version Control)

Module 4: Containerization Basics

- What is Docker?

- Creating & running Docker containers

- Docker for ML environments

- Best practices for reproducibility

Module 5: CI/CD in DevOps

- What is CI/CD?

- Jenkins, GitHub Actions, GitLab CI

- Build pipelines in DevOps

- Automated testing strategies

Module 6: CI/CD for Machine Learning

- Differences between traditional CI/CD and ML pipelines

- Testing ML code, data, and models

- Automated retraining pipelines

- Tools: Kubeflow, MLflow, Airflow

Module 7: Infrastructure as Code (IaC)

- IaC basics: Terraform, Ansible

- Cloud infrastructure provisioning

- Reproducible ML environments

- Multi-cloud deployments

Module 8: Cloud Platforms for MLOps

- AWS SageMaker, Azure ML, GCP Vertex AI

- Managed vs self-managed ML platforms

- Pricing & scaling strategies

- Hybrid & on-prem ML infrastructure

Module 9: Data Engineering for MLOps

- Data ingestion pipelines

- Data cleaning & transformation

- Batch vs real-time processing

- Tools: Apache Kafka, Spark, Flink

Module 10: Experiment Tracking & Model Management

- Why track experiments?

- MLflow tracking

- Weights & Biases (W&B)

- Comparing models & metrics

Module 11: Model Packaging & Deployment

- What is model packaging?

- Building Docker images for ML models

- REST API deployment (Flask, FastAPI)

- gRPC for high-performance ML services

Module 12: Continuous Training (CT)

- What is continuous training?

- Detecting data drift

- Automating retraining pipelines

- Retraining frequency strategies

Module 13: Monitoring in DevOps vs MLOps

- Monitoring servers & apps (DevOps)

- Monitoring ML models in production

- Key metrics: accuracy, latency, drift

- Tools: Prometheus, Grafana, Evidently AI

Module 14: Feature Engineering in Production

- Feature extraction pipelines

- Feature versioning & storage

- Online vs offline features

- Feature stores (Feast, Tecton)

Module 15: Data Governance & Compliance

- Data security & privacy

- GDPR, HIPAA, SOC2 considerations

- Role-based access in ML pipelines

- Audit trails & compliance monitoring

Module 16: ML Workflow Orchestration

- What is orchestration?

- Apache Airflow for ML pipelines

- Kubeflow Pipelines basics

- Dagster & Prefect overview

Module 17: Model Serving Architectures

- Batch serving vs real-time serving

- Model inference APIs

- Serverless deployment (AWS Lambda, GCP Cloud Functions)

- Scalable serving with Kubernetes

Module 18: Kubernetes for MLOps

- Kubernetes basics (Pods, Services, Deployments)

- Running ML workloads on Kubernetes

- Helm charts for ML apps

- Kubeflow on Kubernetes

Module 19: Hyperparameter Tuning

- What is hyperparameter optimization?

- Manual vs automated tuning

- Tools: Optuna, Ray Tune

- Parallel & distributed tuning

Module 20: ML Testing Strategies

- Unit testing ML code

- Testing datasets

- Validating model outputs

- End-to-end ML pipeline testing

Module 21: Model Explainability

- Why explainability matters

- SHAP, LIME, ELI5 tools

- Explaining predictions to stakeholders

- Interpretable ML in regulated industries

Module 22: Model Fairness & Bias Detection

- Understanding bias in ML

- Metrics for fairness evaluation

- Bias mitigation strategies

- Case studies in ethical AI

Module 23: Data Drift & Concept Drift

- What is drift?

- Detecting drift in production

- Statistical & ML-based drift detection

- Drift handling automation

Module 24: A/B Testing for ML Models

- Why A/B test models?

- Experiment design

- Canary deployments

- Tools for A/B testing

Module 25: Model Registry

- Centralized model storage

- Versioning models in registry

- Promoting models across environments

- Tools: MLflow Registry, SageMaker Model Registry
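Promoting a model across environments typically means moving one immutable, numbered version through stages such as staging and production. The hypothetical in-memory registry below illustrates that idea; the class names, method names, and stage names are assumptions made for this sketch and are not the MLflow Registry or SageMaker Model Registry APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical stage names; real registries define their own stages or aliases.
STAGES = ("none", "staging", "production", "archived")
EXCLUSIVE_STAGES = ("staging", "production")  # at most one version per model


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str
    stage: str = "none"


@dataclass
class ModelRegistry:
    """Minimal in-memory model registry, for illustration only."""
    versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str) -> ModelVersion:
        # Each registration gets the next integer version; old versions are never overwritten.
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(name, len(history) + 1, artifact_uri)
        history.append(mv)
        return mv

    def promote(self, name: str, version: int, stage: str) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        history = self.versions.get(name, [])
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        if stage in EXCLUSIVE_STAGES:
            # Archive whichever version currently holds this stage so that,
            # e.g., "production" always resolves to exactly one version.
            for mv in history:
                if mv.stage == stage and mv.version != version:
                    mv.stage = "archived"
        history[version - 1].stage = stage

    def get(self, name: str, stage: str) -> Optional[ModelVersion]:
        # Return the version currently in `stage`, or None if nothing is there.
        return next((mv for mv in self.versions.get(name, []) if mv.stage == stage), None)
```

In this sketch, rolling back is simply promoting the previous version to production again, which works because every registered version is kept rather than overwritten.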

Module 26: Security in DevOps & MLOps

- Securing DevOps pipelines

- Securing ML data & models

- Model poisoning attacks

- Secrets management (Vault, KMS)

Module 27: Edge Deployment in MLOps

- ML at the edge (IoT devices)

- Challenges of edge inference

- TensorRT, ONNX Runtime

- Use cases in real-time analytics

Module 28: Scaling ML Pipelines

- Horizontal vs vertical scaling

- Auto-scaling ML services

- Distributed ML training

- Spark MLlib, Horovod, Ray

Module 29: Cost Optimization in MLOps

- Tracking ML pipeline costs

- Spot instances & autoscaling

- Cost-effective data storage

- Monitoring cloud bills

Module 30: Real-time ML Inference

- Streaming data processing

- Real-time feature pipelines

- Low-latency model serving

- Tools: Kafka Streams, Flink, Ray Serve

Module 31: Transfer Learning & MLOps

- Pre-trained models in pipelines

- Fine-tuning workflows

- Deployment of transfer learning models

- Reducing training costs with TL

Module 32: Deep Learning in MLOps

- Managing GPU workloads

- Scaling DL training

- TensorFlow Extended (TFX)

- PyTorch Lightning for production

Module 33: AutoML Integration

- What is AutoML?

- H2O.ai, Google AutoML, Auto-Sklearn

- Automating ML pipelines

- Trade-offs of AutoML

Module 34: Model Compression & Optimization

- Quantization, pruning, distillation

- Optimizing inference speed

- Reducing model size for deployment

- Tools: TensorRT, ONNX

Module 35: Multi-Cloud & Hybrid Deployments

- Why multi-cloud MLOps?

- Challenges of hybrid clouds

- Tools for portability

- Disaster recovery strategies

Module 36: Advanced Orchestration with Kubeflow

- Kubeflow Pipelines deep dive

- Katib for hyperparameter tuning

- KServe (formerly KFServing) for model deployment

- Advanced pipeline management

Module 37: DataOps & Its Role in MLOps

- What is DataOps?

- DataOps vs MLOps

- DataOps tools (Great Expectations, Deequ)

- End-to-end data quality pipelines

Module 38: Generative AI & MLOps

- Integrating LLMs in pipelines

- Fine-tuning GPT models

- Serving large language models (LLMs)

- Challenges in GenAI operations

Module 39: ML Observability

- What is observability?

- Metrics, logs, and traces in ML

- Tools: Arize AI, Fiddler AI

- Detecting anomalies in production

Module 40: Governance & Responsible AI

- Defining responsible AI

- AI ethics frameworks

- Governance practices in MLOps

- Regulatory compliance

Module 41: Advanced Monitoring Pipelines

- Multi-metric monitoring

- Alerting & anomaly detection

- Self-healing ML pipelines

- Case study: real-time fraud detection

Module 42: DevOps to MLOps Case Studies

- Case study: E-commerce recommendation system

- Case study: Banking fraud detection

- Case study: Healthcare predictive analytics

- Lessons from industry adoption

Module 43: Serverless MLOps

- What is serverless ML?

- FaaS in ML pipelines

- AWS Lambda, GCP Cloud Run

- Pros & cons of serverless MLOps

Module 44: API Management in MLOps

- Building scalable ML APIs

- API gateways (Kong, Apigee)

- Rate limiting & authentication

- Versioning APIs
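Rate limiting is commonly implemented with a token bucket: each client's bucket refills at a fixed rate up to a maximum capacity, and every request spends a token. A minimal single-process sketch follows; `TokenBucket`, `rate`, and `capacity` are illustrative names, and in practice gateways such as Kong or Apigee expose rate limiting as configurable plugins or policies rather than code you write yourself.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter: refills `rate` tokens per second, up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock          # injectable clock makes the limiter easy to test
        self.tokens = capacity      # start full so a short initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the previous call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller would typically respond with HTTP 429
```

A gateway would keep one bucket per API key (for example, in a dictionary keyed by the key); a deployment with several gateway instances would need shared state instead of in-process buckets.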

Module 45: ML in CI/CD Pipelines – Advanced

- Advanced CI/CD workflows

- Blue-green deployment for ML models

- Rollback strategies for ML pipelines

- Multi-stage pipeline execution

Module 46: Collaboration in MLOps Teams

- Roles in MLOps: Data Engineer, ML Engineer, DevOps Engineer

- Communication best practices

- Agile & Scrum in MLOps

- Cross-functional collaboration

Module 47: Building an End-to-End MLOps Pipeline

- From data ingestion → model training → deployment → monitoring

- Toolchain selection

- Orchestration setup

- Hands-on project

Module 48: Tools Comparison in MLOps

- MLflow vs Kubeflow

- TFX vs Airflow

- W&B vs Arize AI

- Choosing the right stack

Module 49: Career in MLOps

- MLOps roles & responsibilities

- Skills required (DevOps + ML)

- Salary trends in India & abroad

- Certifications & career roadmap

Module 50: Capstone Project

- Real-world ML project deployment

- End-to-end CI/CD + CT pipeline

- Documentation & presentation

- Final evaluation & feedback

DevOps & MLOps Trainer Details

INSTRUCTOR

Mr. Rakesh

Expert & Lead Instructor

10+ Years Experience

About the tutor:

Mr. Rakesh, our DevOps & MLOps Trainer, brings more than 10 years of industry expertise in software development, cloud engineering, and AI-driven automation practices. He has collaborated with top MNCs and product-based companies across domains like finance, healthcare, and telecom, building scalable DevOps and MLOps pipelines for enterprise solutions.

He specializes in the end-to-end DevOps and MLOps lifecycle, including CI/CD pipelines, containerization, cloud deployment, infrastructure as code, experiment tracking, and model monitoring. Mr. Rakesh trains students on modern tools like Docker, Kubernetes, Jenkins, Terraform, GitLab, MLflow, Kubeflow, TensorFlow, and cloud platforms such as AWS, Azure, and GCP. His teaching style emphasizes hands-on practice with real-time projects and case studies, ensuring students gain industry-ready skills.

Apart from technical expertise, Mr. Rakesh guides learners in resume preparation, certification roadmaps, interview readiness, and career mentoring. His training equips students for roles like DevOps Engineer, MLOps Engineer, Cloud Automation Specialist, and AI Infrastructure Architect, making them confident professionals in the evolving IT landscape.

Why Join Our DevOps to MLOps Institute In Hyderabad

Key Points

- Expert Trainers

Learn from industry professionals with 10+ years of experience in DevOps, Cloud, and MLOps. They bring real-world case studies into the classroom. Their mentorship ensures you gain practical insights, not just theoretical knowledge.

- Practical Training

Our program focuses on hands-on labs, real-time projects, and case studies. Instead of only learning concepts, you actually implement them. This approach helps you solve real-world challenges confidently.

- Updated Curriculum

The course is designed according to the latest industry standards and trends. It stays aligned with what companies are currently looking for. This ensures your skills remain relevant in the job market.

- Tool Coverage

Gain expertise in popular DevOps and MLOps tools like Docker, Kubernetes, Jenkins, MLflow, Kubeflow, and Terraform. Training also covers leading cloud platforms like AWS, Azure, and GCP. You’ll be job-ready with tool-based expertise.

- Flexible Learning

We provide both classroom and online training options. You can choose weekday or weekend batches to suit your schedule. This flexibility helps you balance learning with your work or studies.

- Certification Guidance

Our trainers provide step-by-step guidance to help you crack global certifications. Mock exams, study material, and doubt-clearing sessions are included. You will feel fully prepared for certification success.

- Career Support

We assist you with resume preparation, mock interviews, and placement guidance. Our placement team connects you with top recruiters. You’ll be supported until you land your dream job.

- Affordable Fees

Get quality training at a competitive fee structure. Easy EMI payment options are also available. This makes high-quality training accessible to everyone.

- Strong Alumni Network

Join a community of successful alumni working in top companies. Network with industry professionals and gain career referrals. This support system keeps you connected even after course completion.

What is DevOps to MLOps?

- DevOps to MLOps bridges the gap between software development and machine learning workflows. It extends DevOps principles to handle data, models, and continuous experimentation. This ensures smooth collaboration between developers, data scientists, and operations teams.

- While DevOps automates code deployment, MLOps focuses on automating the ML lifecycle. It covers data preparation, model training, validation, and deployment into production. This makes AI solutions reliable and production-ready at scale.

- MLOps adds monitoring and governance for deployed models, unlike DevOps, which mainly monitors applications. It helps keep models accurate as real-world data changes, detecting model drift early so it can be corrected before it affects business performance.

- DevOps pipelines deal with code versioning, testing, and CI/CD. MLOps pipelines extend this with dataset versioning, feature engineering, and model reproducibility. This enables end-to-end automation for AI-driven applications.

- MLOps integrates modern tools like MLflow, Kubeflow, and TensorFlow Extended with DevOps platforms. It ensures ML models are productionized with scalability and fault tolerance. This creates reliable AI systems for enterprises.

- The shift from DevOps to MLOps empowers organizations to innovate faster. It provides a framework for collaboration between software engineers and data scientists. Ultimately, it accelerates AI adoption across industries.
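The dataset versioning described above often comes down to identifying data by its content rather than its file name. DVC, for example, records content hashes for the files it tracks. The standard-library sketch below applies the same idea to a whole directory; the function names are hypothetical, and this is not DVC's implementation or API.

```python
import hashlib
from pathlib import Path


def file_fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dataset_fingerprint(root: Path) -> str:
    """Combine per-file hashes into one version ID for every file under `root`."""
    digest = hashlib.sha256()
    # Sort by relative POSIX path so the result does not depend on OS listing order.
    files = sorted((p for p in root.rglob("*") if p.is_file()),
                   key=lambda p: p.relative_to(root).as_posix())
    for path in files:
        # Include the relative path so renaming a file also changes the version;
        # the separators keep (path, hash) pairs unambiguous.
        digest.update(path.relative_to(root).as_posix().encode("utf-8") + b"\0")
        digest.update(file_fingerprint(path).encode("ascii") + b"\n")
    return digest.hexdigest()
```

Storing this fingerprint alongside the Git commit SHA in a model's metadata lets you trace a deployed model back to the exact code and data that produced it.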

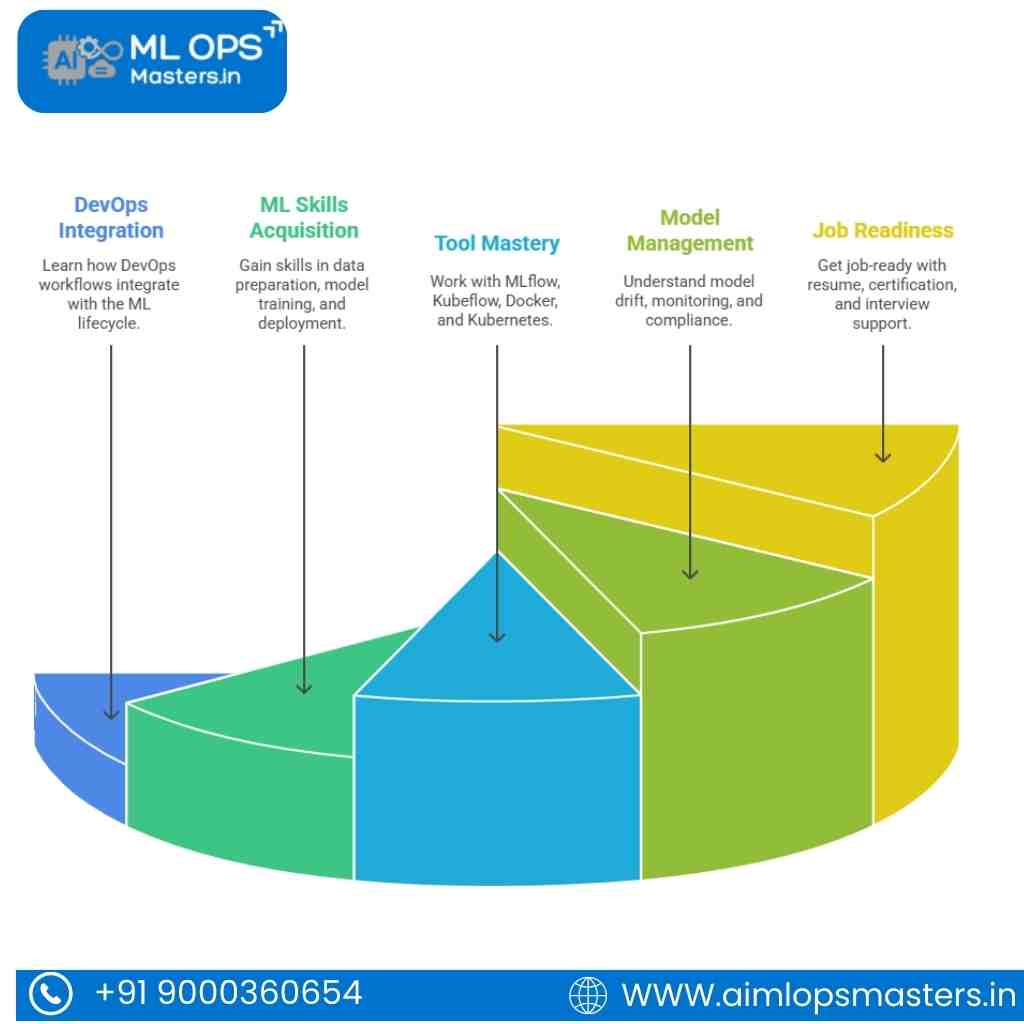

Objectives of the DevOps to MLOps Course In Hyderabad

- Learn how DevOps workflows integrate with the ML lifecycle, enabling smooth AI-driven project delivery.

- Gain skills in data preparation, model training, deployment, and CI/CD automation for ML models.

- Work with MLflow, Kubeflow, Docker, Kubernetes, and cloud platforms through real-time labs and projects.

- Understand model drift, monitoring, and compliance to ensure scalable and reliable ML operations.

- Get job-ready for MLOps Engineer and related roles with resume, certification, and interview support.

Prerequisites of DevOps to MLOps

- Basic knowledge of DevOps practices like CI/CD, version control, and automation is recommended.

- Understanding of Python programming and scripting helps in working with ML workflows.

- Familiarity with Docker, Kubernetes, or cloud platforms gives an added advantage.

- Some exposure to machine learning concepts like data handling and model training is useful.

- A willingness to learn new tools, frameworks, and real-world problem-solving is essential.

Who Should Take the DevOps to MLOps Course

- IT professionals and DevOps engineers who want to expand their skills into machine learning operations.

- Data scientists looking to streamline their models into production with automation and scalability.

- Cloud engineers aiming to integrate ML pipelines with cloud platforms and DevOps practices.

- Software developers interested in building end-to-end solutions combining coding, DevOps, and ML.

- Fresh graduates or career changers who want to enter the growing field of MLOps with strong job demand.

- System administrators wanting to upgrade into AI-driven monitoring, deployment, and automation roles.

DevOps to MLOps in Hyderabad

Course Outline

Understand the fundamentals of DevOps and how it extends into MLOps. Learn the key differences, workflows, and the need for automation in ML. Explore the role of DevOps culture in AI-driven projects.

Master Git and GitHub for managing ML code, datasets, and experiments. Learn collaboration practices for teams working on models and pipelines. Understand branching strategies for model deployment.

Build automated pipelines for ML models using Jenkins, GitLab CI/CD, and GitHub Actions. Integrate testing and validation of datasets and code. Ensure faster, more reliable ML model delivery.
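Validating datasets in a CI pipeline can start as a small script that fails the build when incoming data breaks basic expectations. The sketch below is a hypothetical check: the column names, value ranges, allowed labels, and the `validate_rows` and `check_file` functions are invented for illustration. Dedicated tools such as Great Expectations or Deequ, covered in Module 37, offer much richer checks.

```python
import csv
from typing import List

# Hypothetical expectations for a tabular training file.
REQUIRED_COLUMNS = {"age", "income", "label"}
RANGES = {"age": (0.0, 120.0), "income": (0.0, float("inf"))}
ALLOWED_LABELS = {"0", "1"}


def validate_rows(reader: csv.DictReader) -> List[str]:
    """Return human-readable problems; an empty list means the data passed."""
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        return [f"missing columns: {sorted(missing)}"]
    errors: List[str] = []
    for row_no, row in enumerate(reader, start=1):  # row 1 = first data row
        for col, (lo, hi) in RANGES.items():
            try:
                value = float(row[col])
            except (TypeError, ValueError):  # short rows (None) or non-numeric text
                errors.append(f"row {row_no}: {col}={row[col]!r} is not numeric")
                continue
            if not lo <= value <= hi:
                errors.append(f"row {row_no}: {col}={value} outside [{lo}, {hi}]")
        if row["label"] not in ALLOWED_LABELS:
            errors.append(f"row {row_no}: label={row['label']!r} not allowed")
    return errors


def check_file(path: str) -> int:
    """CI entry point: print problems and return an exit code (non-zero fails the stage)."""
    with open(path, newline="", encoding="utf-8") as fh:
        problems = validate_rows(csv.DictReader(fh))
    for problem in problems:
        print(problem)
    return 1 if problems else 0
```

A pipeline step would call `sys.exit(check_file("data/train.csv"))` (the path is a placeholder), so a bad file stops the pipeline before any training job starts.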

Learn Docker for packaging ML models and Kubernetes for scaling deployments. Understand how containers improve reproducibility and efficiency. Practice deploying ML services on clusters.

Use MLflow, DVC, and TensorBoard for model versioning and experiment tracking. Learn hyperparameter tuning techniques. Gain experience in monitoring performance across datasets.

Deploy models as REST APIs, microservices, and serverless functions. Explore deployment on AWS SageMaker, Azure ML, and GCP Vertex AI. Learn A/B testing and canary release strategies.
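A canary release sends a small, controlled share of traffic to a new model version while the rest stays on the current one. One common approach, sketched below, hashes a stable request key such as a user ID so each user consistently sees the same variant, which also keeps A/B test groups clean. The function name, the version labels, and the bucket count are assumptions for illustration.

```python
import hashlib


def route_request(user_id: str, canary_percent: float,
                  stable: str = "model-v1", canary: str = "model-v2") -> str:
    """Deterministically assign a user to the stable or canary model version."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hash the user ID into one of 10,000 buckets; the same ID always lands in
    # the same bucket, so a user's assignment is stable across requests.
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 10_000
    # Buckets below the threshold go to the canary (e.g. 10% -> buckets 0..999).
    return canary if bucket < canary_percent * 100 else stable
```

Because the threshold only grows as the rollout widens, raising `canary_percent` from 10 to 25 keeps every existing canary user on the canary and only moves additional users over; setting it back to 0 is an instant rollback.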

Implement monitoring for ML models in production using Prometheus and Grafana. Learn anomaly detection, drift monitoring, and alerting. Ensure reliability of AI systems at scale.
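Drift monitoring typically compares the distribution of a feature in production against its training (reference) distribution. The sketch below computes the Population Stability Index (PSI), one widely used statistical drift score, using equal-width bins over the reference range; quantile bins are a common alternative. The function name, bin count, and `eps` smoothing value are assumptions, and tools such as Evidently AI provide PSI alongside other drift tests.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum((cur% - ref%) * ln(cur% / ref%)) over bins."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))
```

A frequently quoted rule of thumb reads PSI below 0.1 as little change, 0.1 to 0.25 as moderate drift, and above 0.25 as significant drift worth an alert; these cut-offs are conventions, not standards, and should be tuned per feature.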

Automate retraining workflows, testing, and data pipelines. Learn to scale ML workloads using cloud-native tools. Explore cost optimization while handling large datasets.
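Automating retraining usually means encoding an explicit policy for when a new training run should start (see "Retraining frequency strategies" in Module 12). The sketch below combines two common signals: a drift score crossing a threshold and the deployed model exceeding a maximum age. The function name, the default thresholds, and the choice to treat either signal as sufficient are assumptions to adapt to your own pipeline, for example as the first task of an Airflow or Kubeflow pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class RetrainDecision:
    retrain: bool
    reason: str


def should_retrain(drift_score: float, trained_at: datetime,
                   drift_threshold: float = 0.25,
                   max_age: timedelta = timedelta(days=30),
                   now: Optional[datetime] = None) -> RetrainDecision:
    """Decide whether to trigger retraining from a drift score and the model's age.

    `trained_at` must be timezone-aware when `now` is omitted, because the
    default `now` is the current UTC time.
    """
    now = now or datetime.now(timezone.utc)
    # Signal 1: the monitored drift score (e.g. PSI) is at or above the threshold.
    if drift_score >= drift_threshold:
        return RetrainDecision(True, f"drift {drift_score:.3f} >= {drift_threshold}")
    # Signal 2: scheduled refresh, even without measurable drift.
    age = now - trained_at
    if age >= max_age:
        return RetrainDecision(True, f"model age {age.days}d >= {max_age.days}d")
    return RetrainDecision(False, "within drift and age limits")
```

Returning a reason string alongside the decision makes each automated retraining run easy to audit later.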

Work on real-time projects integrating DevOps and MLOps workflows. Solve business use cases with hands-on labs and cloud-based tools. Present solutions that demonstrate end-to-end automation.

DevOps to MLOps Course In Hyderabad

Modes

Classroom Training

- Daily Recorded Videos

- One-on-One Project Guidance

- Practical Application

- Get support till you are placed

- Mock Interviews

- Well-Organized Syllabus

Online Training

- Flexible Learning Schedule

- Recorded Video access

- WhatsApp Group Access

- Doubt Clearing Sessions

- Daily Session Recordings

- Real-world Projects

Corporate Training

- Live Project Training

- On-site or Virtual Training Sessions

- Doubt Clearing Sessions

- Daily Class Recordings

- Team-building Activities

- Video Material Access

DevOps to MLOps Coaching In Hyderabad

Career Opportunities

01

MLOps Engineer

A specialized role focused on deploying, monitoring, and automating ML models at scale. MLOps Engineers ensure seamless integration of machine learning with DevOps pipelines, maintaining efficiency and reliability.

02

Data Scientist with MLOps Skills

Data Scientists who understand MLOps can take their models beyond experimentation into production. This dual skillset opens up opportunities to work on end-to-end AI solutions.

03

AI/ML Engineer

AI/ML Engineers leverage DevOps-to-MLOps practices to build scalable and production-ready AI systems. They are in high demand across industries like finance, healthcare, retail, and e-commerce.

04

Cloud AI Specialist

Organizations building AI on cloud platforms like AWS, Azure, and GCP rely heavily on MLOps professionals. Cloud AI Specialists design, deploy, and optimize ML pipelines using cloud-native tools.

05

Automation and DevOps Engineer

With added MLOps expertise, traditional DevOps Engineers expand their roles into ML-focused automation. They handle CI/CD for models, infrastructure as code, and automated retraining workflows.

06

IT Operations Analyst (AI-driven Ops)

IT professionals with MLOps skills can transition into roles where AI supports operations. They manage predictive maintenance, anomaly detection, and automated incident resolution using AI-driven tools.

DevOps to MLOps Course Institute In Hyderabad

Skills Developed

Continuous Integration & Deployment for ML

You’ll master building CI/CD pipelines not just for code, but also for ML models. This ensures smooth and automated delivery of models into production environments.

Model Monitoring & Performance Tracking

You’ll develop skills in monitoring deployed models, tracking drift, and maintaining accuracy over time. This helps keep ML systems reliable in real-world use.

Data Pipeline Management

You will learn to design and manage scalable data pipelines. These pipelines handle data ingestion, preprocessing, and transformation critical for machine learning workflows.

Cloud & Containerization Skills

Training builds expertise in Docker, Kubernetes, and cloud platforms like AWS, Azure, and GCP. These skills help you deploy and scale ML models in production environments.

Automation & Orchestration

You’ll develop automation skills for tasks like model retraining, testing, and deployment. Orchestration tools help maintain consistency across ML workflows.

Collaboration & Workflow Integration

Strong collaboration skills are fostered, bridging Data Science and DevOps teams. You’ll learn to integrate workflows across coding, data, and production systems effectively.

DevOps to MLOps Course Online Certifications

- You will gain certifications that validate your ability to manage the complete DevOps to MLOps lifecycle, ensuring strong industry recognition.

- Certifications will cover CI/CD pipelines for machine learning, model deployment, and automation in real-world scenarios.

- You will be certified in handling modern tools like Docker, Kubernetes, MLflow, and cloud services such as AWS, Azure, and GCP.

- These certifications enhance your profile for roles like MLOps Engineer, DevOps Specialist, and Cloud ML Architect.

- Online certifications also provide global recognition, helping you secure job opportunities in both Indian and international markets.

Companies that Hire From Amazon Masters

DevOps to MLOps Course Training In Hyderabad

Benefits

- Hands-On Learning with Real Projects

The course emphasizes practical knowledge through live projects and case studies. Learners get exposure to real-world DevOps to MLOps pipelines, making them industry-ready from day one.

- Expert Guidance from Industry Trainers

Students learn directly from professionals who have real-time experience in DevOps and MLOps. This ensures training is aligned with current industry practices and demands.

- Comprehensive Curriculum

The program covers everything from DevOps basics to advanced MLOps workflows. It includes CI/CD, automation, model deployment, monitoring, and scaling ML models in production.

- Career-Oriented Training

The course prepares you for top job roles such as MLOps Engineer, Cloud ML Specialist, and DevOps-MLOps Consultant. Resume building and interview support are also provided.

- Exposure to Leading Tools & Platforms

Students work on tools like Docker, Kubernetes, MLflow, TensorFlow, AWS, Azure, and GCP. This exposure builds confidence to handle real-time enterprise projects.

- Global Recognition & Certifications

The certifications earned are widely recognized, boosting your career prospects. They open doors to opportunities in both Indian and international markets.

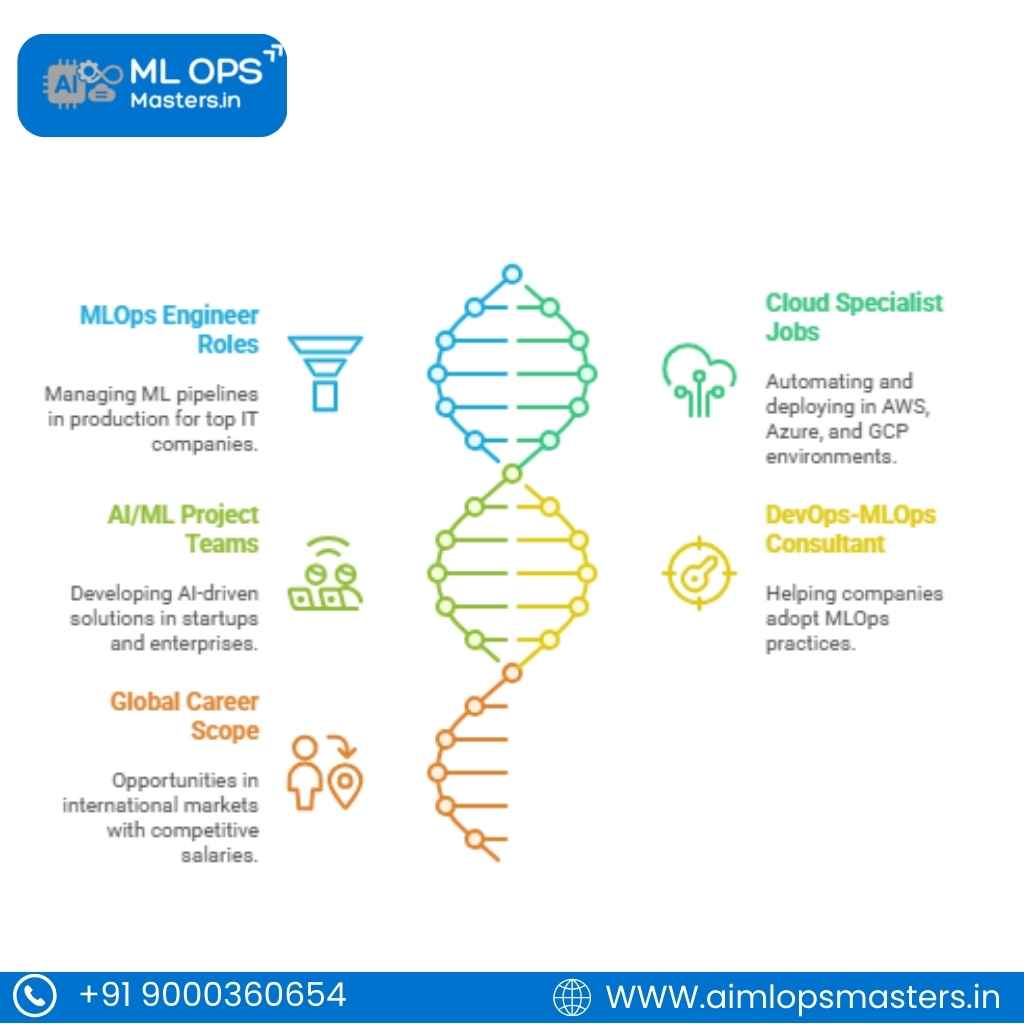

DevOps to MLOps Placement Opportunities

- MLOps Engineer Roles – Opportunities in top IT companies to manage ML pipelines in production.

- Cloud Specialist Jobs – High demand in AWS, Azure, and GCP environments for automation and deployment.

- AI/ML Project Teams – Placement in startups and enterprises focusing on AI-driven solutions.

- DevOps-MLOps Consultant – Freelance and full-time roles helping companies adopt MLOps practices.

- Global Career Scope – Openings in international markets with competitive salary packages.

DevOps to MLOps Market Trend

Rapid Adoption of MLOps in Enterprises

More companies are shifting from traditional DevOps to MLOps to manage machine learning pipelines effectively. This trend is driven by the need for automation, scalability, and faster deployment of AI models in real-world systems.

Integration with Cloud Platforms

Cloud providers like AWS, Azure, and GCP are offering specialized MLOps services. This makes it easier for enterprises to adopt MLOps practices without building everything from scratch, boosting demand.

Growing Demand for AI-Powered Automation

MLOps brings automation to model training, testing, and monitoring. With industries embracing AI, MLOps tools are becoming essential for reducing manual workloads and improving accuracy.

Rise in Model Governance and Compliance

With stricter data privacy laws, enterprises focus on explainable AI and model governance. MLOps frameworks are evolving to ensure compliance and security across industries like finance and healthcare.

Increased Collaboration Between Data Science and IT

MLOps bridges the gap between data scientists and DevOps engineers. This collaboration trend is leading to faster innovation cycles and more reliable AI solutions.

Expanding Use in Multiple Industries

Sectors like retail, finance, healthcare, and e-commerce are adopting MLOps. Each industry uses it differently, from fraud detection to personalized shopping experiences, increasing its market value.

Surge in Open-Source MLOps Tools

Open-source platforms such as MLflow, Kubeflow, and TensorFlow Extended are driving the adoption of MLOps. Businesses prefer these tools for flexibility, customization, and cost-effectiveness.

Rising Job Opportunities in MLOps

The demand for skilled professionals in MLOps is rapidly increasing. Roles like MLOps Engineer, AI Infrastructure Specialist, and Cloud AI Engineer are becoming highly sought-after.

Frequently Asked Questions About Market Trends

FAQs

What is the current trend in MLOps adoption?

The trend shows a rapid shift from DevOps to MLOps, as companies seek to integrate AI and ML into business workflows.

Why are enterprises moving towards MLOps?

Because MLOps helps automate model deployment, monitoring, and scaling, making AI adoption smoother and faster.

How are cloud platforms influencing MLOps trends?

Cloud providers like AWS, Azure, and GCP are offering dedicated MLOps services, boosting adoption rates.

Which industries are leading in MLOps adoption?

Finance, healthcare, retail, and e-commerce are among the top industries adopting MLOps practices.

How is MLOps different from DevOps in market usage?

While DevOps manages software pipelines, MLOps is focused on machine learning lifecycle management and automation.

What role does automation play in MLOps market growth?

Automation reduces human errors, speeds up deployments, and makes scaling AI models easier, driving market demand.

Is MLOps demand growing in India?

Yes, India is seeing rapid growth in MLOps adoption, especially in IT hubs like Hyderabad, Bengaluru, and Pune.

Are open-source MLOps tools contributing to the trend?

Yes, tools like MLflow, Kubeflow, and TensorFlow Extended are pushing adoption due to flexibility and cost-effectiveness.

How do data privacy laws affect MLOps adoption?

Stricter compliance requirements push companies to use MLOps frameworks with governance and monitoring features.

Are startups also adopting MLOps?

Yes, many startups use MLOps to bring AI products to market faster and more efficiently.

What is the future market size of MLOps?

The global MLOps market is expected to grow significantly, reaching a multi-billion-dollar size by 2030.

How is collaboration driving MLOps adoption?

MLOps fosters teamwork between data scientists, developers, and IT, making AI solutions more reliable.

Which job roles are in demand due to MLOps trends?

Roles like MLOps Engineer, AI Infrastructure Specialist, and Cloud AI Engineer are highly sought after.

Is MLOps limited to large enterprises?

No, even SMEs are adopting MLOps to stay competitive and implement AI efficiently.

How do businesses benefit from MLOps market growth?

They gain faster innovation, cost reduction, and better customer experiences powered by AI.

What challenges slow down MLOps adoption?

Challenges include lack of skilled talent, tool complexity, and integration with existing systems.

Is MLOps adoption faster in developed countries?

Yes, but developing countries like India are catching up quickly due to IT industry growth.

How are cloud-native solutions shaping MLOps trends?

Cloud-native MLOps solutions simplify deployment, monitoring, and scaling of machine learning models.

Does MLOps improve AI model accuracy?

Yes, with continuous monitoring and retraining, MLOps ensures models remain accurate and reliable.

How do businesses measure ROI from MLOps adoption?

Through reduced costs, faster product launches, and improved decision-making with accurate AI models.

Are certifications in MLOps valuable in the current market?

Yes, certifications help professionals showcase skills and tap into rising career opportunities.

Will MLOps replace DevOps?

No, MLOps builds on DevOps. Both will coexist, with MLOps addressing AI/ML-specific challenges.

Which companies are leading in MLOps solutions?

Google, Microsoft, AWS, IBM, and DataRobot are among the leaders.

What impact does AI growth have on MLOps?

The AI boom fuels the need for robust MLOps pipelines to manage large-scale deployments.

Are MLOps tools expensive?

Some are, but many open-source tools make it affordable for small and mid-sized companies.

Is MLOps relevant only for technical teams?

No, it impacts business teams too by enabling faster insights and decision-making.

What skills are trending for MLOps professionals?

Cloud computing, Kubernetes, MLflow, CI/CD, Python, and deep learning frameworks are in demand.

Can MLOps improve customer experience?

Yes, businesses can deliver more personalized and faster services using AI managed through MLOps.

What is the long-term market trend for MLOps?

It’s projected to see double-digit growth annually, with widespread adoption across industries.

How do placements benefit from MLOps market trends?

Rising demand for MLOps ensures abundant job opportunities with attractive salaries worldwide.